Orders for the Apple Vision Pro just opened (US only), and Apple also uploaded a new guided tour, which gives us a chance to reflect on the kind of experiences the company is putting front and center.

In the VR community there has been much ado about Apple’s choice to entirely refuse the industry jargon: there is no VR, MR, XR, AR, it’s all spatial computing. They’ve been accused of making up new words to obfuscate what they’re offering. We think it’s more subtle than that.

First of all, naming things matters. Some of the experiences we build can be considered part of the “metaverse”, but that’s not a term we use, because it doesn’t have a good technical definition, and because it reminds people of sleazy operations. Some think of the Meta advertisements, some think of literal scams. Those scams are so pervasive that I don’t dare mention them, lest I attract their spam.

Apple systematically chooses feature names that represent the value added for the user’s experience, while simultaneously obfuscating the technical details. They don’t want us to know the DPI of a screen; they just tell us it’s Retina. It doesn’t matter very much to Apple that competitors make screens with better resolution: if it’s Retina it’s good enough, and you’re not going to see the individual pixels. It’s annoying for us nerds, but it seems to work just fine.

In some ways it’s similar with Spatial Computing. It’s technically indisputable that the Apple Vision Pro is a VR headset with a Mixed Reality passthrough function, just like the Meta Quest 3. But what Apple is doing is not only obfuscation, and they certainly didn’t come up with the term. What they’re trying to communicate is that the advantage of computing on a headset rather than on a laptop is going to be spatial in nature. Spatial computing is not a new term at all: the essays written by Timoni West at Unity were an inspiration for my dissertation, and then for the founding of this company. The fundamental idea is to use the dimensionality of the interface for communication between the user and the computer. Information doesn’t have to be limited to a 2D screen, and most importantly user input doesn’t have to be limited to discrete actions. It’s still hard work, but we can enable natural interactions that allow us to think gesturally. Let me gesture that I want a piece of machinery to be ✋ this 🤚 big, and have the computer figure out how big that is in numbers. This is the kind of use case that Sony is targeting with their new VR headset.
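To make the “this big” gesture concrete, here is a minimal sketch of the arithmetic involved. It assumes a hand-tracking system that reports each palm as an (x, y, z) position in meters; the function name `gesture_span_cm` and the sample coordinates are made up for illustration and don’t come from any particular headset SDK.

```python
import math


def gesture_span_cm(left_palm, right_palm):
    """Distance between two tracked palm positions, in centimeters.

    Both arguments are hypothetical (x, y, z) coordinates in meters,
    as a hand-tracking system might report them.
    """
    return math.dist(left_palm, right_palm) * 100.0


# Hypothetical sample: palms held 40 cm apart at chest height.
left = (-0.20, 1.10, -0.35)
right = (0.20, 1.10, -0.35)
print(f"{gesture_span_cm(left, right):.1f} cm")  # prints "40.0 cm"
```

The math is the easy part. The hard work is in everything around it: tracking the hands reliably, deciding when the user actually means the gesture, and smoothing out the jitter.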

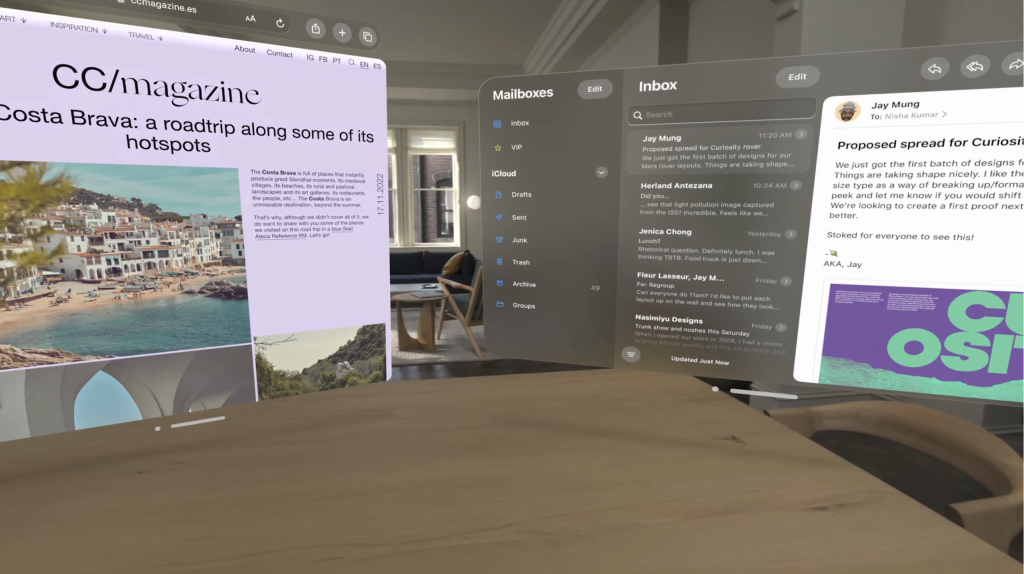

Looking at the Apple developer documentation, a lot of work has gone into making the creation of this kind of experience possible, but they’re not focusing their presentation on that at all. All that the user is seen doing is positioning virtual windows around them.

This is extremely similar to what was possible with Windows Mixed Reality or with the Quest. On the HoloLens the visual fidelity was just not enough, and the field of view was tiny. On the Quest 2 the visual fidelity was still too low. It is much improved on the Quest 3, but it is still somewhat worse than just staring at a screen. Looking at the specs, the Apple Vision Pro might finally be able to pull off this use case.

However, it’s not at all the kind of spatial computing that I described above.

Instead, it harkens back to the old days of the classic Mac Finder, and in some ways to the design of Jef Raskin. The idea is that rather than following the hierarchical organization of the file system, the interaction between the user and the computer will determine a spatial organization of the information that is being worked on, that will mirror a spatial conceptualization in the user’s mind.

A lot of the modern window management solutions that Apple has added to both the Mac and the iPad, like Stage Manager and Mission Control, have enabled users to experiment with this kind of paradigm. There is also a $9.99 Mac spatial desktop environment called Raskin as an homage. Raskin’s son Aza also developed Tab Candy, which brought spatial tab management to Firefox.

I think the jury is still out on how useful this kind of spatialization is, but I think it’s clear that it is part of what Apple is going for. With any immersive technology we must always remember that the real immersion is in the user’s mind: we can feel present in a novel as much as in VR. As a company we are sticking to the other kind of spatialization, where the spatial information is intrinsic to the problem domain, whether it’s reproducing historical artifacts, simulating the propagation of sound in an environment, or assembling a machine in the correct order. Apple Vision Pro makes both of them much easier to develop. You know, when we actually get one here in Italy 😀
